Bio

I was working at xAI to help launch Grok 4, contributing to DeepSearch, Search Agents, X Agents and @grok, covering human and synthetic data as well as evaluation and products. I also work as a founding member and scientist at Amazon Generative Foundational AI, building LLMs from scratch for Amazon (i.e. Rufus), focusing on multiple aspects of LLMs [Blog: Reproduction and Usage of GPT3/ChatGPT], including 1) pretraining (data, infrastructure, scaling laws); 2) post-training (instruction tuning, human and AI preference learning, reasoning and agentic RL); 3) evaluation; 4) language agents (tool use, planning and reasoning, long-context handling); and 5) alignment and AI safety [Blog: AI Safety: Why, What, and How].

I received Computer Science degrees from Georgia Tech and Peking University. My advisor was Prof. Diyi Yang, currently at Stanford University. I also did research at Google and Microsoft previously.

The major question I'm thinking about is where the boundary lies between LLM capability and alignment [Blog: Capability and Alignment].

Before the era of LLMs, I studied Natural Language Processing [Blog: NLP Trends from the perspective of LLM, i.e. summary of ACL 2022 (in Chinese)], Machine Learning, and large-scale pretraining of unified multimodal, multitask, multilingual Transformer models [Repo: Paper List of Multi-modal Deep Learning].

My previous work on semantic parsing, compositional generalization, text generation, code generation, multilinguality and multi-modality makes me particularly interested in language agents, reasoning and grounding.

Our Amazon Team also has research intern / full-time openings in Generative AI. If you are interested, shoot me an email.

News

Aug 11th, 2024: New paper on LLM Inductive vs. Deductive Reasoning reported by Forbes. [Paper] [Forbes Media Coverage]

March 16th, 2024: We are hosting KDD Cup 2024: Multi-Task Online Shopping Challenge for LLMs. You are welcome to participate! Although I personally believe LLMs are generalists and should not be optimized toward any particular task, an AI Shopping Assistant is still useful (e.g. as a Language Agent)!

Feb 14th, 2024: New Blog Post on "Capability or Alignment? Respect the LLM Base Model's Capability During Alignment". [Blog] [Tweets]

May 9th, 2023: New Blog Post on "AI Safety: Why, What, and How". [Blog] [Tweets]

April 29th, 2023: New survey "Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond" is on arXiv. [Paper] [Repo] [Yann LeCun's Post]

Feb 13th, 2023: New Blog Post on Reproduction and Usage of GPT3/ChatGPT. [Blog] [Blog (in Chinese)] [Tweets]

Blog

- Jingfeng Yang. 2024. Capability or Alignment? Respect the LLM Base Model's Capability During Alignment. Blog Post, Feb 2024. [Blog] [Tweets]

- Jingfeng Yang. 2023. AI Safety: Why, What, and How. Blog Post, May 2023. [Blog] [Blog (in Chinese)] [Tweets]

- Jingfeng Yang. 2023. Why Did All of the Public Reproduction of GPT-3 Fail? In Which Tasks Should We Use GPT-3.5/ChatGPT? Blog Post, Feb 2023. [Blog] [Blog (in Chinese)] [Tweets]

- Jingfeng Yang. 2022. NLP Trends from the Perspective of LLM, i.e. Summary of ACL 2022. Blog Post, July 2022. [Blog (in Chinese)]

- Jingfeng Yang. 2022. The Dark Matter towards AGI: Compositional Generalization. Blog Post, April 2022. [Blog (in Chinese)]

- Jingfeng Yang. 2022. TableFormer: Robust Transformer Modeling for Table-Text Encoding. Blog Post, March 2022. [Blog (in Chinese)]

Publications

Selected Work

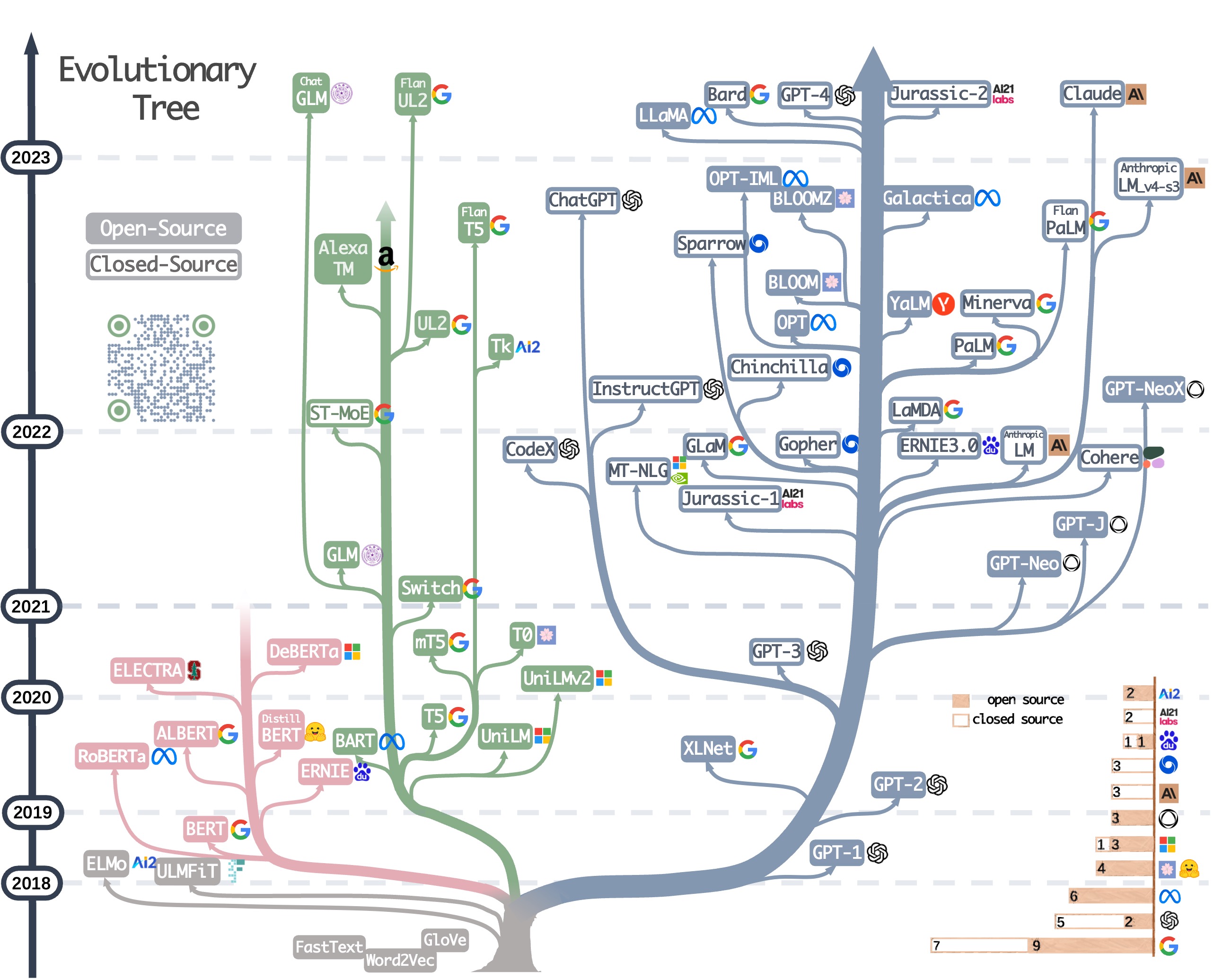

- Jingfeng Yang*, Hongye Jin*, Ruixiang Tang*, Xiaotian Han*, Qizhang Feng*, Haoming Jiang, Bing Yin, Xia Hu. 2023. Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond. TKDD 2024. [Paper] [Yann LeCun's Post] [Based on my Blog Post] [Repo] Star. Figure of LLM Evolutionary Tree (Credits to Hongye Jin):

- Jingfeng Yang, Haoming Jiang, Qingyu Yin, Danqing Zhang, Bing Yin, Diyi Yang. 2022. SEQZERO: Few-shot Compositional Semantic Parsing with Sequential Prompts and Zero-shot Models. In NAACL' 2022 (Findings). [Paper] [Code] [Slides] [Blog (in Chinese)]

-- 1) The first one (Jan 16th, 2022 on OpenReview) to propose Problem Decomposition and Sequential Prompting LLMs for better compositional generalization, even before Chain-of-Thought Prompting and Least-to-Most Prompting, although CoT and Least-to-Most prompting could achieve better performance with larger LMs in a wider range of tasks :). 2) The first one to try model merging (model weight ensemble) with LLMs for generation tasks (refer to notes in related work). 3) Proposed to combine constrained rescaling (constrained decoding) with model ensemble to make ensemble work for LLM generation.

- Jingfeng Yang, Aditya Gupta, Shyam Upadhyay, Luheng He, Rahul Goel, Shachi Paul. 2022. TableFormer: Robust Transformer Modeling for Table-Text Encoding. In ACL' 2022 (Oral). [Paper] [Code] [Slides] [Blog (in Chinese)]

-- The first one to identify the vulnerability of prior table-text encoding models to row/column perturbations. Designed and pretrained a TableFormer model, where learnable attention biases could help achieve strict robustness.

Other Publications and Preprints

- Kewei Cheng, Jingfeng Yang, Haoming Jiang, Zhengyang Wang, Binxuan Huang, Ruirui Li, Shiyang Li, Zheng Li, Yifan Gao, Xian Li, Bing Yin, Yizhou Sun. 2024. Inductive or Deductive? Rethinking the Fundamental Reasoning Abilities of LLMs. ACL 2024 NLRSE Workshop. [Paper] [Forbes Media Coverage]

- Hongye Jin, Xiaotian Han, Jingfeng Yang, Zhimeng Jiang, Zirui Liu, Chia-Yuan Chang, Huiyuan Chen, Xia Hu. 2024. LLM Maybe LongLM: Self-Extend LLM Context Window Without Tuning. ICML 2024. [Paper] [Yann LeCun's Post] [Code]

- Yu Wang, Yifan Gao, Xiusi Chen, Haoming Jiang, Shiyang Li, Jingfeng Yang, Qingyu Yin, Zheng Li, Xian Li, Bing Yin, Jingbo Shang, Julian McAuley. 2024. MEMORYLLM: Towards Self-Updatable Large Language Models. ICML 2024. [Paper]

- Xin Liu, Zheng Li, Yifan Gao, Jingfeng Yang, Tianyu Cao, Zhengyang Wang, Bing Yin, Yangqiu Song. 2023. Enhancing User Intent Capture in Session-Based Recommendation with Attribute Patterns. NeurIPS 2023. [Paper]

- Caleb Ziems*, William Held*, Jingfeng Yang, Diyi Yang. 2022. Multi-VALUE: A Framework for Cross-Dialectal English NLP. ACL 2023. [Paper]

- Xutan Peng, Yipeng Zhang, Jingfeng Yang, Mark Stevenson. 2022. On the Security Vulnerabilities of Text-to-SQL Models. ISSRE 2023 (1 of 3 Best Paper Candidates). [Paper]

- Ruijie Wang, Zheng Li, Jingfeng Yang, Tianyu Cao, Chao Zhang, Bing Yin, Tarek Abdelzaher. 2023. Mutually-paced Knowledge Distillation for Cross-lingual Temporal Knowledge Graph Reasoning. In WWW' 2023. [Paper]

- Jingfeng Yang*, Le Zhang*, Diyi Yang. 2022. SUBS: Subtree Substitution for Compositional Semantic Parsing. In NAACL' 2022. [Paper] [Code] [Slides] [Blog (in Chinese)]

- Jingfeng Yang, Federico Fancellu, Bonnie Webber, Diyi Yang. 2021. Frustratingly Simple but Surprisingly Strong: Using Language-Independent Features for Zero-shot Cross-lingual Semantic Parsing. In EMNLP' 2021. [Paper] [Code] [Slides]

- Yang Zhong, Jingfeng Yang, Wei Xu, Diyi Yang. 2021. WIKIBIAS: Detecting Multi-Span Subjective Biases in Language. In EMNLP' 2021 (Findings). [Paper]

- Jingfeng Yang, Zhaoran Ma, Diyi Yang. 2020. Planning and Generating Natural and Diverse Disfluent Texts as Augmentation for Disfluency Detection. In EMNLP' 2020. [Paper] [Code] [Slides]

- Jingfeng Yang, Sujian Li. 2018. Chinese Discourse Segmentation Using Bilingual Discourse Commonality. Preprint. [Paper]

- Yizhong Wang, Sujian Li, Jingfeng Yang. 2018. Toward Fast and Accurate Neural Discourse Segmentation. EMNLP' 2018. [Paper]

- Yizhong Wang, Sujian Li, Jingfeng Yang, Xu Sun, Houfeng Wang. 2017. Tag-enhanced Tree-structured Neural Networks for Implicit Discourse Relation Classification. In The 8th International Joint Conference on Natural Language Processing (IJCNLP' 2017). [Paper]

Service

Reviewer/PC member in NLP Conferences: ACL Rolling Review, ACL' 2023, EMNLP' 2023, EMNLP' 2022, NAACL' 2021

Reviewer/PC member in ML Conferences: NeurIPS' 2022, ICML' 2023

Reviewer/PC member in AI Conferences: AAAI' 2023, IJCAI' 2023

Reviewer/PC member in Data Mining Conferences/Transactions: KDD' 2023, TKDE' 2022

Research Experiences

Research Assistant in College of Computing, Georgia Institute of Technology. Advisor: Diyi Yang. Aug 2019 - May 2021.

Visiting Researcher in Institute for Language, Cognition and Computation, The University of Edinburgh. Advisor: Bonnie Webber. July 2018 - Sep 2018.

Research Assistant in Department of Computational Linguistics, Peking University. Advisor: Sujian Li. July 2017 - June 2019.

Industry Experiences

Applied Scientist, Amazon, Palo Alto. Jan 2022 - present.

Applied Scientist Intern, Amazon, Palo Alto (Virtual). Mentors: Haoming Jiang, Danqing Zhang, Qingyu Yin, Bing Yin. Sep 2021 - Dec 2021.

Research Intern, Google, Mountain View (Virtual). Collaborators: Aditya Gupta, Shyam Upadhyay, Luheng He, Rahul Goel, Shachi Paul. July 2021 - Sep 2021.

Software Development Engineer Intern, Amazon, San Francisco (Virtual). May 2020 - July 2020.

Research Intern, Microsoft Research Asia, Beijing, China. Mentor: Jin-ge Yao. December 2018 - March 2019.

Talks

July, 2023: Gave several talks on Artificial Superintelligence: Autonomous Agent and Super-alignment at Peking University and WAIC. [Slides]

Sep, 2023: Gave several talks on LLMs: Practices, Paradigm Shifts, Remaining Challenges at Meta and Amazon. [Slides]

April, 2023: Gave several talks on Paradigm Shifts and Remaining Challenges Towards AGI at Google Brain and Michigan State University. [Slides]

March, 2023: Gave a talk on GPT series and NLP future directions at Peking University. [Slides]

Feb, 2023: Gave several talks on Reproduction and Usage of GPT-3/ChatGPT at Rice University, Texas A&M University, Intuit AI Research, BAAI etc. [Slides]

Nov 17th, 2022: Gave a talk on Table Understanding and Text2SQL at Alibaba DAMO Academy. [Slides]

Aug 4th, 2022: Gave a talk on Compositional Generalization in LLM Era at BAAI Seminar. [Slides]

Jun 10th, 2022: Gave a talk on TableFormer in the A9 ML Talk Series. [Slides]

Mar 28th, 2021: Gave some lectures for NLP bootcamp as a TA.

Feb 15th, 2021: Gave a lecture on PyTorch as head TA in CS-4650 Natural Language Processing. [Slides]

Sep 2nd, 2020: Gave a lecture on Deep Learning as a TA in CS-4650/7650 Natural Language Processing. [Slides]

Teaching Experiences

Teaching Assistant for Coding Support, POLS-585 Text as Data, Emory University, Atlanta. June 2021 - July 2021.

Head Teaching Assistant, CS-4650 Natural Language Processing, Georgia Institute of Technology, Atlanta. Spring 2021.

Teaching Assistant, CS-4650/7650 Natural Language Processing, Georgia Institute of Technology, Atlanta. Fall 2020.

Teaching Assistant, CS-4650/7650 Natural Language Processing, Georgia Institute of Technology, Atlanta. Spring 2020.

Awards

May 4th Fellowship, 2016-2017.

Kwang-Hua Fellowship, 2015-2016.

Merit Student of Peking University, 2016-2017, 2015-2016.

Silver medalist in Chinese Mathematics Olympiad (CMO), 2015.

Intern Mentorship

Yuchen Zhuang, Georgia Tech. Summer 2024.

Zilong Wang, UCSD. Summer 2024.

Hongye Jin, Texas A&M University. Summer 2024.

Kewei Cheng, UCLA. Fall 2023.

Xiaotian Han, Texas A&M University. Summer 2023.

Yu Wang, UCSD. Summer 2023.

Hongzhi Wen, Michigan State University. Summer 2023.

Zhimeng Jiang, Texas A&M University. Fall 2022.

Zining Zhu, University of Toronto. Summer 2022.

Ruijie Wang, UIUC. Summer 2022.

Jie Huang, UIUC. Summer 2022.

Xin Liu, HKUST. Summer 2022.